Top-tier open-source video generation on VBench 2.0 with 12–18× faster inference. SOTA video editing — #1 on OpenVE-Bench, FiVE-Bench, and Reco-Bench, surpassing Kling O1.

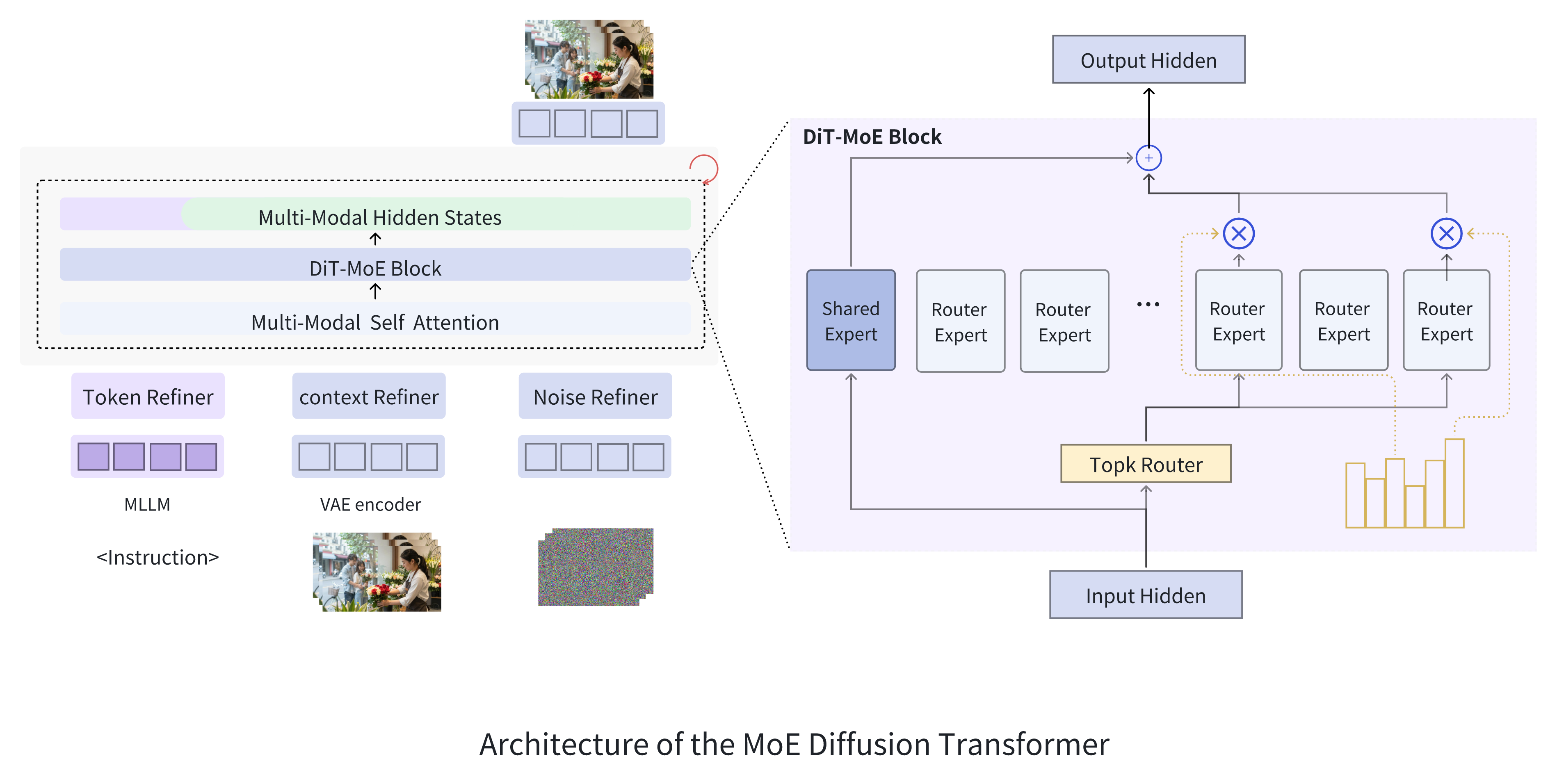

Mammoth2.5 employs a fine-grained Mixture-of-Experts (MoE) design with 128 routed experts and Top-8 routing to scale the DiT backbone to 25B total parameters while activating only ~3B per forward pass (~12%). This yields over 12× faster inference than Wan2.2 A14B on a single device, and ablation studies confirm a ~2.2× convergence speedup over dense models under matched activated parameters.
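The routing idea above can be sketched in a few lines of plain Python. This is a minimal illustration of generic Top-k expert routing, not the Mammoth2.5 implementation: the source does not describe the gating function, so the softmax over the selected logits, the toy scalar "experts", and the names `route_token` and `moe_forward` are all assumptions made for the example. Only the counts (128 routed experts, Top-8, ~3B of 25B parameters) come from the text.

```python
import math
import random

NUM_EXPERTS = 128  # routed experts (from the text)
TOP_K = 8          # experts selected per token (from the text)


def route_token(logits, k=TOP_K):
    """Pick the k highest-scoring experts for one token.

    Returns a list of (expert_index, weight) pairs. The weights are a
    softmax over the selected logits only, so they sum to 1. This gating
    choice is an assumption; the source does not specify it.
    """
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    peak = max(logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(x, logits, experts, k=TOP_K):
    """Combine the outputs of the selected experts, weighted by the router."""
    return sum(w * experts[i](x) for i, w in route_token(logits, k))


# Hypothetical router scores for a single token.
rng = random.Random(0)
router_logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

# Toy experts: each one just scales its input by a different constant.
experts = [lambda x, s=i: x * (s + 1) for i in range(NUM_EXPERTS)]

selected = route_token(router_logits)
y = moe_forward(2.0, router_logits, experts)

# Only 8 of the 128 routed experts run for this token.
routed_fraction = TOP_K / NUM_EXPERTS  # 0.0625
# Activated share of all parameters, using the figures quoted in the text.
activated_fraction = 3 / 25            # 0.12
```

Only 6.25% of the routed experts run per token, while the text quotes ~12% of all parameters as active. That gap would be consistent with some parameters (for example attention layers) being active for every token, though the source gives no breakdown.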

Mammoth2.5 is a single AR-Diffusion framework built on Qwen3-VL for multimodal understanding and an MoE DiT backbone for generation. One unified model supports text-to-image, text-to-video, image editing, and video editing, eliminating the need for separate task-specific models.

@article{mammoth2.5,
  title={MammothModa2.5: A MoE Architecture for Unified Visual Generation and Editing},
  author={MammothModa Team},
  year={2025}
}

@article{shen2025mammothmoda2,
  title={MammothModa2: A Unified AR-Diffusion Framework for Multimodal Understanding and Generation},
  author={Shen, Tao and Wan, Xin and Chen, Taicai and Zhang, Rui and Pan, Junwen and Lu, Dawei and Lei, Fanding and Lu, Zhilin and Yang, Yunfei and Cheng, Chen and others},
  journal={arXiv preprint arXiv:2511.18262},
  year={2025}
}